My Big Fat Alpine Crash

It all started when I was testing Rookout on Docker (with Alpine and Python).

Rookout is a new approach to data collection and pipelining from live code. We let developers request any piece of data with just a few clicks and view it on their own machine, whatever framework, cloud, or environment they use.

So, there I was, testing our application when I realized that some commands were working just fine and some commands were being ignored.

The auto-restart destruction

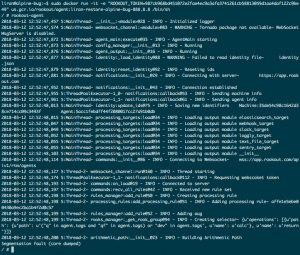

The situation seemed pretty odd. Why would the container respond to some of the commands and ignore others? I started with a standard SSH session and ran a few basic Docker commands such as “docker ps” to see which containers were running.

I noticed that the agent had restarted 139 times in quick succession (!) and had been up for only one second, which led me to believe it was constantly crashing.

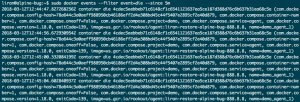

After running “docker events”, my suspicion was confirmed.

I immediately noticed that the ‘demo_agent_1’ was constantly dying when some commands were sent and that Docker was silently restarting the application. Now the question was: Why is the container dying?

Understanding the real issue: A memory problem

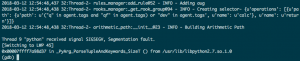

I ran “docker logs” but it wasn’t informative enough to shed light on my problem, so I decided to get inside the container to manually run the process.

This time, I got some additional information: A segmentation fault was crashing the process and causing the Docker container to exit.
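A quick way to confirm from the outside that a process died from a segmentation fault, rather than exiting on its own, is to look at how it terminated. The following is a minimal sketch and not part of the original investigation: it starts a child interpreter that deliberately sends itself SIGSEGV and then inspects the return code. The names `CRASHING_CHILD`, `run_crashing_child` and `died_from_segfault` are made up for this illustration.

```python
import signal
import subprocess
import sys

# Child program: disable core dumps (to avoid littering the disk),
# then deliver SIGSEGV to itself to simulate a crash.
CRASHING_CHILD = (
    "import os, resource, signal\n"
    "resource.setrlimit(resource.RLIMIT_CORE, (0, 0))\n"
    "os.kill(os.getpid(), signal.SIGSEGV)\n"
)


def run_crashing_child():
    """Run the crashing child and return its return code."""
    return subprocess.call([sys.executable, "-c", CRASHING_CHILD])


def died_from_segfault(returncode):
    """On POSIX, subprocess reports death by signal N as return code -N."""
    return returncode == -signal.SIGSEGV
```

Calling `died_from_segfault(run_crashing_child())` should report a segfault on Linux. Shells report the same condition as exit status 128 + 11 = 139.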

There are two ways you could debug such Python crashes under Linux. Both provide similar results.

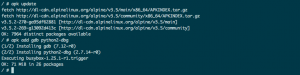

Option 1: Install GDB and execute Python under it

- We’ll start by installing GDB and the Python debug symbols:

$ apk add gdb python2-dbg

- Let’s run our script under GDB. Since GDB expects a binary and will not process bang lines, we must explicitly execute Python with the path to our script:

$ gdb -ex=run --args python /usr/bin/rookout-agent

- The debugger now stops the process as soon as the signal is raised.

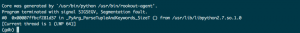

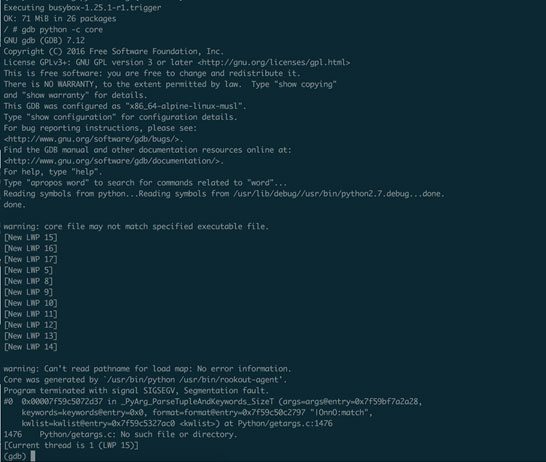

Option 2: Use GDB to open and investigate the crash dump

- We start by installing GDB and the Python debug symbols:

$ apk add gdb python2-dbg

- Load the core dump of the Python process in GDB:

gdb python -c core

Note: If you can’t find a crash dump file, follow this link to make sure it’s generated and figure where it’s located
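One common reason for a missing core dump is that the soft limit on core file size is 0. As a hedged sketch (the helper name `enable_core_dumps` is hypothetical), a process can raise its own soft limit to the hard limit with the standard `resource` module before doing any risky work. Where the file ends up still depends on the kernel's core pattern setting, which this snippet does not touch.

```python
import resource


def enable_core_dumps():
    """Raise the soft core-file size limit to the hard limit for this process.

    If the hard limit itself is 0, core dumps stay disabled and the limit
    has to be raised from outside the process (for example by the container
    runtime or the shell that starts it).
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_CORE)
    resource.setrlimit(resource.RLIMIT_CORE, (hard, hard))
    return resource.getrlimit(resource.RLIMIT_CORE)
```

The function returns the new `(soft, hard)` pair, so the caller can log it and see whether dumps are actually possible.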

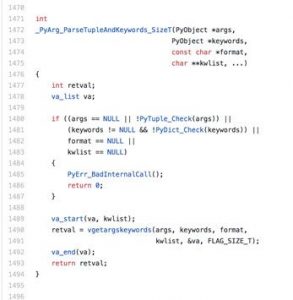

Whether we used live debugging or loaded the core dump file, we see exactly where the segfault is happening: Python/getargs.c:1476

Aha! moment: stack overflow

A segmentation fault is reported through SIGSEGV, a Unix signal indicating an invalid memory access performed by our application. Invalid memory access is usually triggered by one of two conditions:

- Using an invalid pointer (for example: double free, use after free, uninitialized pointer). As Python does not allow us to directly access the process memory, this could only be caused by a bug in the underlying CPython implementation or by a native extension module.

- Using too much stack memory (commonly known as a “stack overflow”). This is often caused by deep (or infinite) recursion.

We know the exact location of the invalid memory access from GDB. Let’s take a look at the code (GitHub):

You can clearly see that line 1476 is the function’s opening curly brace, which is where the function’s stack frame is set up. A crash at that point almost certainly means we have a stack overflow 🙁

The root problem

But wait a second! If our call stack is so shallow, how did it already overflow? As you may know, Alpine Linux is based on musl libc to minimize resource usage (especially memory). If you dig around the web long enough, you’ll eventually come to realize that musl was originally designed for embedded systems, and one of its differences is a very small default thread stack size of 80KB. Python is CPU- and memory-hungry and was designed to use much larger stacks, even for very simple scripts.
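To see which stack limits a given process is running with, the standard library exposes two relevant values. This is only a sketch (the function name `describe_stack_limits` and the dictionary keys are made up): `threading.stack_size()` returns 0 when new threads use the C library's default, so it cannot report musl's built-in number directly, while `RLIMIT_STACK` is the limit that on Linux governs the main thread's stack.

```python
import resource
import threading


def describe_stack_limits():
    """Collect the stack-related limits visible from inside Python."""
    soft, hard = resource.getrlimit(resource.RLIMIT_STACK)
    return {
        # 0 means "use the platform / C library default" for new threads.
        "thread_stack_size": threading.stack_size(),
        # Limits for the main thread's stack, in bytes
        # (resource.RLIM_INFINITY if unlimited).
        "main_stack_soft_limit": soft,
        "main_stack_hard_limit": hard,
    }
```

Printing this dictionary inside an Alpine container and inside a glibc-based one is a cheap first check when a crash looks stack-related.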

The solution

Fortunately, Python’s threading.stack_size can be used to set the stack size of threads created afterwards.

This is how I fixed it:

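from threading import Thread, stack_size

STACK_SIZE = 1024 * 1024  # 1 MB per thread

def execute():
    # Must be called before the worker thread is created
    stack_size(STACK_SIZE)

    # Main is the application's entry-point class, defined elsewhere
    main = Main()
    thread = Thread(target=main.execute, name="main")
    thread.daemon = True
    thread.start()

    try:
        # Join with a timeout so the original thread can still handle ^C
        while thread.is_alive():
            thread.join(1)
    except KeyboardInterrupt:
        pass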

When using this workaround, pay attention to the following caveats:

- Do it as early as possible, before importing any other modules. If those modules use a significant amount of stack space during initialization, they may crash, and if they create a new thread before you set the new size, their threads will not get the bigger stack!

- Do not utilize the original main thread, as it does not have the new and improved stack 🙂

- In your original main thread, it is important to expect and process Unix signals, especially SIGINT, which indicates that ^C was pressed. It is up to you whether to do an orderly cleanup or simply exit the process.
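The caveats above can be put together in a small sketch. It is an illustration under assumptions, not the code Rookout ships: `worker`, `request_stop`, `run` and `stop_requested` are hypothetical names, and the worker simply idles until it is asked to stop. The stack size is set at the very top, the real work runs in a new thread, and the original main thread only waits and handles signals.

```python
import signal
import threading

# Caveat 1: set the size before anything else gets a chance to create threads.
threading.stack_size(1024 * 1024)

# Shared flag used to ask the worker to shut down in an orderly way.
stop_requested = threading.Event()


def worker():
    """Hypothetical stand-in for the real application entry point."""
    while not stop_requested.is_set():
        stop_requested.wait(0.1)


def request_stop(signum, frame):
    """Signal handler: runs in the main thread and only sets the flag."""
    stop_requested.set()


def run(target=worker):
    # Caveat 3: the original main thread is the one that receives signals.
    signal.signal(signal.SIGINT, request_stop)
    signal.signal(signal.SIGTERM, request_stop)

    # Caveat 2: the real work runs in a new thread with the larger stack.
    thread = threading.Thread(target=target, name="main")
    thread.daemon = True
    thread.start()

    # Join with a timeout so pending signal handlers get a chance to run.
    while thread.is_alive():
        thread.join(1)
```

Using an event instead of catching KeyboardInterrupt is a design choice: it lets the worker finish its current step and clean up, whereas simply returning from the main thread would end the process with the daemon thread still running.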

The happy ending

In the months between encountering this bug, working around it, and publishing this blog post, the bug was fixed in the latest official Python Docker images and in the Python 2 APKs for Alpine 3.7. If you haven’t upgraded yet, here’s yet another reason to do so. If for some reason you cannot upgrade, feel free to use the above code snippet as a workaround.