Shifting Right in Software Development: Adapting Observability for a Seamless Development Experience

You’ve probably heard the “shift-left” mantra echoing throughout the tech industry. And if you haven’t, let me be the first to tell you that you’ve been living under a rock. Like a real rock, not even a figurative one.

In all seriousness, “shift-left” has shaken things up quite a bit, bringing with it a paradigm shift in how we approach software development.

The shift-left approach aims to discover and address bugs and other issues early in the development process, improving software quality and reducing the costs of late-stage troubleshooting. It has also redistributed the burden of software quality, which is no longer confined solely to QA teams. Developers, project managers, and even business stakeholders are now more involved in maintaining and enhancing software quality, creating a sense of shared ownership and improving organizational efficiency.

Despite the immense benefits of shift-left, a fascinating counterpart is emerging in the industry: the shift-right. Today, engineers spend an increasing amount of time developing and testing code in production-like environments. This movement to the right, so to speak, brings new challenges. From navigating complex development environments to troubleshooting software in production-ready stages, these challenges are different and often harder to solve. However, by integrating effective Observability tools throughout the software development lifecycle (SDLC), we can overcome them and ensure smoother sailing.

Challenges in the Development Environment

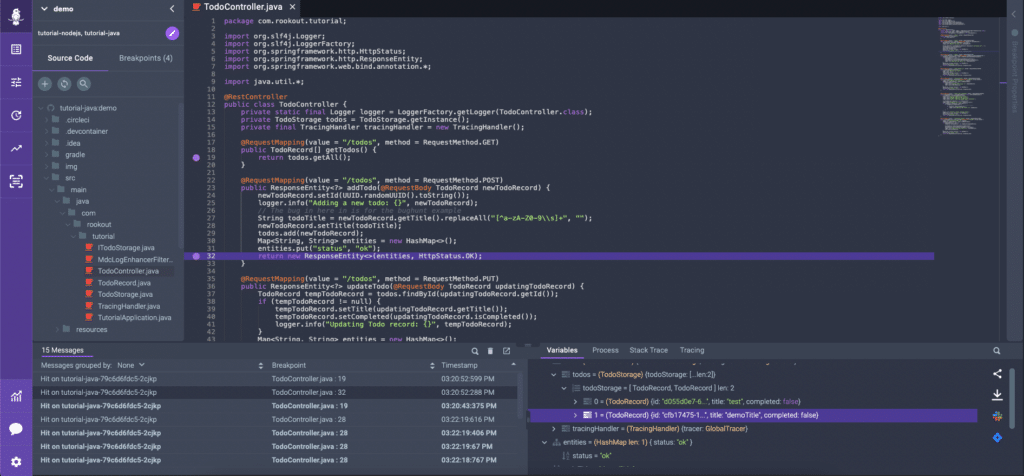

One of the biggest issues when shifting right is the increased complexity within the development environment. Developers, normally confined to the cocoon of the coding stage, are finding themselves thrust into production-like environments rife with intricate dependencies and broader-scale issues.

And let’s be real: these environments aren’t as forgiving as the sandbox playgrounds we’re accustomed to. They’re full of real-world variables and anomalies, which makes debugging a complex endeavor, even for the bravest of developers. Instead of the familiar luxury of stepping through code one line at a time, developers face interactions among many components and dependencies, making it harder to pinpoint the root cause of an issue.

Navigating this environment requires a refined toolset. Developers need Observability tools that can handle the intricate layers of production-like environments, illuminating their path through the dense forest of code. These tools need to be resilient and versatile, providing insights at every stage of the software development lifecycle, so developers can follow their code from inception to execution and efficiently troubleshoot any issues that arise.

Challenges in Testing Environments

Testing is no longer a standalone phase sandwiched somewhere in the middle of the development process. Instead, it now runs through the entire lifecycle. It’s like a party guest popping up when you least expect it, except this time it’s less about the surprise and more about making sure the software we’re crafting is as robust as possible.

The testing environment is becoming closer to the real world, more dynamic, and far more complex. It’s no longer just about testing isolated bits and pieces of code; it’s about seeing how the whole jigsaw fits together in a production-like setting. The goal? To create a mirror image of production, so we can spot potential mishaps and fix them, hopefully before they crash the party.

Why the sudden change in tune? Well, it’s simple. Modern software development is striving for software that’s not just great on paper but truly shines when it hits the real-world stage. This shakeup is a step in that direction, ensuring that development teams are not just building but building right. But as with all good things, this comes with a fresh set of challenges, especially when it comes to debugging and troubleshooting software. Thankfully, the right Observability tools are coming to the rescue, helping us keep pace and stay in sync with the new rhythm.

Challenges in Launching New Code and Features

The cherry on top of software development is actually launching new code or features, which can feel akin to launching a rocket into space. It may not be quite that dramatic in reality, but it often feels that way. It’s a big deal.

This stage is exciting but can also be stressful. On one hand, you’re excited about the new possibilities and enhancements. On the other hand, well, we’ve all seen bugs pop up when we least expect them.

So how can we ensure a smooth release while also minimizing the impact on customers? The answer is pretty simple: give your team the right dynamic Observability tools. These tools give you a clear, high-resolution, real-time view of how your code performs in the real world as it runs, so you can understand exactly what’s happening at any given moment and find and squash those pesky bugs before they cause serious harm. You know, like having x-ray vision, but for your code.

One tool that provides exactly these capabilities is Rookout. With Rookout, you can see what’s wrong, fix it, and get back to doing what you do best: creating awesome code and new features.

Smooth Sailing and Seamless Deployments

The shift-right in the software development lifecycle is a reality that can’t be ignored. It brings its own challenges, from debugging in complex environments to troubleshooting software under real-world conditions. But it also brings us closer to the reality of our end users, helping us deliver higher-quality software.

Observability tools are our allies here, equipping us to navigate these intricate landscapes. They provide the necessary visibility across the SDLC, turning the daunting task of debugging and troubleshooting software into a manageable (dare we say even enjoyable?) part of the development process.

So, while the industry is still humming the shift-left tune, it’s time we embrace this shift to the right. After all, it’s in these real-world, production-like environments that our software truly comes to life. And isn’t that what we, as developers, live for? The thrill of seeing our code in action, making a tangible difference in the world? That’s the magic of software development, and with the right tools, it’s magic we can master, regardless of where we are in the software development lifecycle.

If you’re interested in diving further into this topic, check out my full webinar with SD Times.