There’s no doubt — Devops and the sheer scale of the software it enables have truly revolutionized the world of software development. Evolving from humble single-server beginnings two decades ago, it’s finally reached the point where we can build elastic software at scale, thanks to cloud and orchestration layers such as IaaS, containers, k8s (Kubernetes), and serverless (FaaS).

New developments create new challenges. As developers try to keep pace with the ever-increasing complexity, speed, and scale of their software, they are realizing that they are the new bottleneck, still stuck working with the same development and debug tools from a simpler age.

A flood of new challenges

The most beloved characteristics of modern software, and those which make it so powerful — scale, speed, extreme modularity, and high distribution — are also the qualities that make developing and maintaining it so difficult. These new challenges can be roughly divided into three categories or levels: Complexity, connectivity, and development.

These categories are closely related: Often challenges in one category are solved by translating the problem to the next level up. This ‘challenge flow’ isn’t a straightforward vertical waterfall but is rather best envisioned as a cascading spiral. Just as complexity and connectivity spur development, development challenges lead to greater degrees of complexity, and so forth.

Complexity challenges

Complexity challenges are a direct result of software solutions tackling more complex problems, making them the most obvious set of challenges. They include:

- Developing distributed modular systems (aka microservices), which requires engineers to take time to plan how to break down, deploy and connect software.

- Developing scalable software. Engineers must consider what scale the solution will need to support. (Whatever happened to “this server supports up to X users?”)

- Handling data complexity in transit and at rest (usually coupled with “big data”). Modern software requires engineers to plan for extreme cases of throughput (high traffic, workloads), fast processing (real-time), storage, and search. What used to be a unique skill-set just a few years ago is now expected of every fullstack engineer (“You know Hadoop/Redis/Mongo/Kafka… right?”), raising the bar of most software projects. Saving your data as a .csv file or even a local SQL database is rarely an option anymore.

The skillset developers require to address these challenges is constantly growing, along with the time and energy that they need just to get started: to understand the problem space and assess how the available tools can be optimally applied.

Often when complexity issues become too great to manage, the problem escalates to become a next-level connectivity challenge, with complexity encapsulated in a new solution or method. Adding encapsulated software solutions such as databases, memcache, message-queues and ML engines in effect “delegates” the problem to be solved by the way we weave and orchestrate the overall solution. Once a specific pattern of complexity-to-connectivity escalation starts to be repeated frequently, it usually translates into a new standard development method or solution.

Connectivity challenges

Connectivity challenges result from the way modern software is woven together. There are at least three parallel connectivity chains:

- Interconnectivity – connectivity within the software solution, such as the connections between microservices or modules.

- External connectivity – connectivity with other software solutions, including 3rd party servers, SDKs and SaaS.

- Meta-connectivity – the configuration and orchestration layer used to build, deploy, and manipulate the software solution.

In even the simplest of layouts, each connectivity layer comprises dozens of elements, as well as multiple connections between the layers. Just keeping track of all the connections and data flows is a huge, even Sisyphean, management and architectural effort.

Now, consider that connections constantly change due to changing needs or as part of the architecture itself, as with load balancing. Systems quickly reach a point where developers must invest many hours, even weeks in some cases, to understand what connects to what in the software solution they are working on. The days of “That box is connected to that box, and that’s the wire connecting them” are long gone.
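To make the "what connects to what" question concrete, here is a minimal sketch that treats connections as data and walks them. The service names and the `CONNECTIONS` map are hypothetical, invented purely for illustration; a real system would have to discover this map from configuration or live traffic, which is exactly the hard part described above.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical connection map: each service -> the services it calls directly.
CONNECTIONS: Dict[str, List[str]] = {
    "web": ["auth", "orders"],
    "orders": ["payments", "queue"],
    "payments": ["third-party-gateway"],
    "auth": [],
    "queue": [],
    "third-party-gateway": [],
}


def reachable_from(start: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Return every service that `start` depends on, directly or indirectly."""
    seen: Set[str] = set()
    pending = deque(graph.get(start, []))
    while pending:
        service = pending.popleft()
        if service in seen:
            continue  # already visited; also protects against cycles
        seen.add(service)
        pending.extend(graph.get(service, []))
    return seen


print(sorted(reachable_from("web", CONNECTIONS)))
```

Even in this toy layout, a change to the external gateway reaches `web` through two intermediate hops, and the map itself goes stale the moment an orchestrator reshuffles the connections.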

We’ve already listed plenty of ‘frosting’ on the connectivity ‘cake’, but let’s not forget the cherry on top: security and compliance. Engineers are expected not only to understand all aspects of connectivity but to design, build, monitor and maintain them so that the overall solution is secure and meets all required standards. This is mind-bogglingly difficult when even the smallest defect in the smallest element can bring the entire house down. It’s like saying to the engineers, “You know that huge haystack you’re trying to pile up? Make sure all those pieces of hay connect just right, and while you’re at it, check for needles, too.”

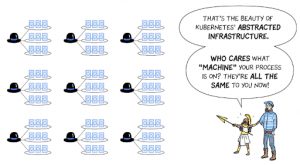

As time progresses (both within projects and in general) and connectivity layouts grow beyond what the human mind can keep track of, developers turn to the meta-connectivity layer and try to automate and orchestrate connectivity (e.g. Puppet/Chef/Ansible, K8S, Istio, Helm, …). “You like code, right? So, here’s more code to orchestrate your code while you are writing code.”

When this modus operandi, often described as ‘configuration as code’, approaches maturity, it transfers to the next level of challenges: development. It also elevates the resolution of each challenge to a software solution in its own right.

Development challenges

Development challenges are the classic pains involved in producing functional software within a framework. These emerge from the growing gap between the power of modern software and developers’ ability (or lack thereof) to keep pace with it. Moreover, since all the challenges from the previous levels gradually propagate to this one, the challenges here are at the bleeding-edge of the dev/devops experience. These include:

- Dev environment challenges require developers to assemble, learn, and manage all tools and workflows chosen for the software solution being developed

- The sheer number of tools and methodologies is overwhelming and still rising, even after the huge growth of the past decade. To name just a few: IDEs, source control, compilers, transpilers, DBA tools, cloud consoles, development servers, debuggers, orchestrators, monitoring agents, task/ticket management, and alerting solutions.

- Context switching makes matters worse. This ungodly pile of tools doesn’t hit developers just once, but continues to bombard them. Developers must always be ready to deal with a different situation. (“It was raining Git commits yesterday but today I’ve been waiting for hours for it to start snowing containers… Oh wait, it’s actually going to be a typhoon of tickets with a chance of tracing.”)

Today, for developers, “getting into the zone” is no longer a matter of efficiency; it’s essential for basic productivity. And that’s before we complicate the stack with AI, quantum-computing, biocomputing, and other miracles. (“If you think multithreaded programming is hard, I have some bad news for you…”)

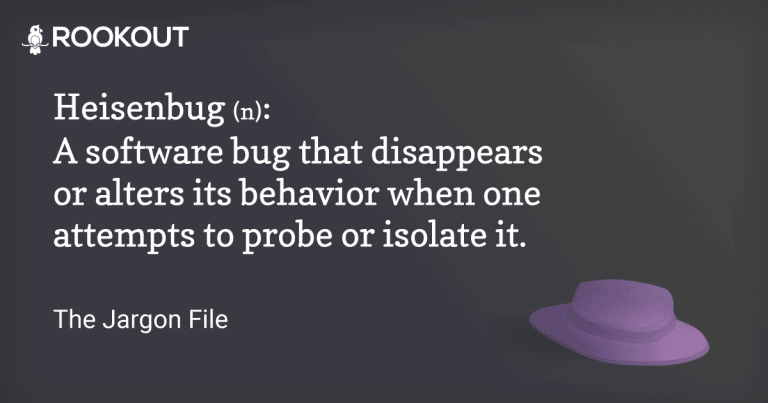

Replicating bugs

Often the first step toward understanding a bug is observing it. The most basic approach to observation is a replication of the bug in a controlled (usually local) environment. Sadly, with modern software, replication has become a herculean feat. The many challenges and factors mentioned above interact to create cases so complex and interconnected that any attempt to simulate them is doomed to fail, or break the budget trying. (“We think the issue was a result of rain in Spain, and a butterfly beating its wings… Alright, so let’s start by building a life-size model of Spain, and I’ll check Amazon for bulk butterfly shipments.”)

As in vitro bug replication becomes less of an option, in vivo observability becomes a must. Unfortunately, developers often discover that their ancient toolbox is ill-equipped for the task. The old tools simply don’t work in modern software environments; the reason usually comes down to loss of access.

- You can’t access the ephemeral – Multiple layers of encapsulation, the result of translating complexity challenges into connectivity, have made access an issue, as layers like containers, Kubernetes, and serverless take ownership of the software environment. The issue is clearest for serverless / FaaS: Not only do developers lack access to the server, but as far as they are concerned there is no server. Even if by some trick or hack one gains access to an underlying server through all the layers, it’s practically impossible to pinpoint a specific server/app since these fluctuate, controlled by orchestration layers, which constantly remove and add servers on the fly.

- Bringing an SSH knife to a K8S gunfight – With access lost, existing tools have become obsolete.

- SSH, which provided an all-powerful direct command-line console, has become unusable in most cases and is, at best, a huge stability risk.

- Traditional debuggers (in contrast to Rapid Production Debuggers) have nothing to attach to. More significantly, the idea of breaking (setting a breakpoint) on a server in production is unacceptably risky, since it is likely to literally BREAK production or at best, knock microservices and functions out of sync, removing any chance of replicating or debugging substantial issues in a distributed system.

- IDEs used to represent a developer’s full view of the system from code through compiling to building and running. They enabled developers to sync their views and approaches to software they share. IDEs were never very close to live systems, but the gap between them has grown so great that they can no longer create shared views. While integration to modern source control (e.g. Git) and CI/CD solutions helps, the gap is still large.

The CI/CD bottleneck

With access lost and few alternatives, if any, developers have turned to deploying code as the main way to achieve access. Any need to interact with software translates to writing more code, merging it, testing it, approving and of course, finally deploying it. This approach is leveraged for adding/removing features, getting observability (monitoring and investigating), and applying fixes and patches. This overwhelming dependency on code deployment creates a bottleneck as all these deployments fight for space in the pipeline and the attention of those who maintain it.

As the flow of challenges continues spiraling onwards, it gathers momentum. Today’s torrent is but a taste of the challenges the future holds. Once these challenges of modern software development are brought into focus, a clear picture emerges of a huge gap between the power of modern software and developers’ ability to keep pace with it. We see this observability gap most often in development challenges but in the other challenge levels as well. Bridging that gap is a key capability we require from modern dev and devops tools.

A bridge over troubled waters

Currently, only a handful of solutions is available to developers who face these challenges. Most developer tools have remained stagnant for years. An engineer from 20 years ago would be completely baffled by modern servers in the cloud but would recognize a current IDE or debugger in seconds. That said, the modern developer toolbox does have some new solutions. These include solutions designed to enable greater focus, solutions that attempt to do more with the limited available data, and solutions that try to bridge the observability gap by improving on the deployment cycle.

“Like a glove” – Tailored observability views

With many modern software challenges starting to repeat and consolidate into known forms and formats (such as Docker, K8S, Serverless functions), a new breed of tools has emerged that identifies these patterns and leverages their knowability to tailor specific solutions.

Within this category are next-generation APM solutions such as Datadog, which provide views built specifically for Containers and Kubernetes on top of their existing APM offerings. In fact, you’ll find that most APMs adjust and provide capabilities for the microservices world, although not always as first-class citizens.

In an even more modern tailored approach, we find solutions doubling down on structured data and specifically Tracing, such as Honeycomb.io (alongside open source projects such as Zipkin and Jaeger). These target the specific pain of reconstructing a distributed system’s behavior, much as a detective reconstructs a crime scene, a pain that arises from the very nature of microservice architectures.
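The detective work of tracing can be sketched in a few lines. This is an illustration only, not how any of the tools named above are implemented: the `Span` fields and the sample data are assumptions, loosely modeled on the common idea that each span carries a trace ID and a pointer to its parent span.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    """One unit of work reported by one service (hypothetical schema)."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root of the trace
    service: str
    operation: str
    duration_ms: float


def render_trace(spans: List[Span], trace_id: str) -> List[str]:
    """Rebuild the call tree of one trace from spans that arrive in any order."""
    children: Dict[Optional[str], List[Span]] = defaultdict(list)
    for span in spans:
        if span.trace_id == trace_id:
            children[span.parent_id].append(span)

    lines: List[str] = []

    def walk(parent_id: Optional[str], depth: int) -> None:
        for span in children.get(parent_id, []):
            indent = "  " * depth
            lines.append(f"{indent}{span.service}:{span.operation} ({span.duration_ms} ms)")
            walk(span.span_id, depth + 1)

    walk(None, 0)
    return lines


# Sample spans, deliberately out of order, as they might arrive from different services.
SPANS = [
    Span("t1", "c", "b", "payments", "charge", 40.0),
    Span("t1", "a", None, "gateway", "GET /checkout", 120.0),
    Span("t1", "b", "a", "orders", "create_order", 90.0),
    Span("t2", "z", None, "gateway", "GET /health", 1.0),
]

for line in render_trace(SPANS, "t1"):
    print(line)
```

The point of the sketch is that no single service ever sees the whole request; the tree only exists once the scattered spans are stitched back together by their IDs.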

For Serverless, we find solutions like Epsagon and Lumigo, which are tailored specifically to the FaaS use case and target specific pain points such as discovery, management, and pricing. These issues, of course, were present before, but became more acute with Serverless.

“Deus Ex Machina” – Advanced analysis

With software grown big, big data is not only a challenge but also a means of tackling the problem. Multiple solutions harness the strengths of machine learning to attain observability, and some use it as a primary approach. Examples include solutions like Coralogix and Anodot.

“Developers of the world, unite!” – Advanced workflows

Harnessing machines to solve machine problems is a start, but it isn’t a silver bullet.

As a result, many solutions focus on building a better developer workflow on top of the views and alerts provided by the automated part of the solution. Sentry.io is one great example: with the recent release of Sentry 9, it improves the cooperative workflow on top of its existing exception management platform.

“Getting a taste of the real stuff” – Canary deployments

Most solutions reviewed here so far, and most solutions in the devops space in general, work by deriving value from data that exists in the system, or that can be collected in advance by focusing on a specific pattern (such as tracing).

While a significant step forward, these solutions still leave us highly dependent on the CI/CD channel: getting new data out of production means updating the log lines or SDK calls that feed the systems upstream, and then deploying those changes.

One way to reduce pressure on the pipeline is to use canary deployments, a variant of blue-green deployments. They enable developers to expose new code (in this case, the necessary observability changes) to a limited percentage of production, without affecting all of production. They also allow faster rollbacks.

Recipes for canary deployments can be found for most leading CI/CD tools such as GitLab and Codefresh.
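The core idea of a canary split can be sketched independently of any particular CI/CD tool. The snippet below is a simplified illustration, not a recipe from GitLab or Codefresh: the function names and the choice of hashing a request key into 100 buckets are assumptions made for the example. In practice the split is usually enforced by a load balancer or service mesh rather than by application code.

```python
import hashlib


def in_canary(request_key: str, canary_percent: int) -> bool:
    """Deterministically place a request key inside or outside the canary slice."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


def route(request_key: str, canary_percent: int) -> str:
    """Pick which deployment serves the request (hypothetical deployment names)."""
    return "canary" if in_canary(request_key, canary_percent) else "stable"


# Send roughly 5% of users to the build that carries the new log lines.
hits = sum(in_canary(f"user-{i}", 5) for i in range(10_000))
print(f"{hits} of 10000 users routed to the canary")
```

Because the bucket is derived from the key, a given user always lands on the same side of the split, and setting `canary_percent` back to 0 acts as an immediate rollback. It also makes the limitation visible: any user outside the chosen buckets never runs the new observability code.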

Yet a huge gap still remains: What if the needed data isn’t within the limited canary percentage?

While canary deployments reduce the friction and risk of observing production, they still leave most of it on the table, making it an expensive and risky effort that most can’t afford.

“Bridging the gap with agility” – Rapid data collection

Despite the many solutions above, a gap remains between the observability data developers need from live systems and the pace at which they can iterate to collect and deliver that data to their various solutions (including some listed here). A new cohort of solutions attempts to bridge this gap by completely decoupling data collection from the CI/CD pipeline and thus eliminating the friction, risks, and bottleneck that create the gap.

These rapid solutions collect and deliver data on demand without prior planning, creating evanescent bridges as needed, instead of trying to anticipate specific bridges for predictable cases. This ephemeral access approach aligns with the ephemeral nature of modern software. Rookout’s Rapid Debugging solution is one example: it leverages instrumentation and opcode manipulation capabilities to enable developers to collect data from live environments and pipe it to whichever platform they need.
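To give a feel for collecting data on demand without pausing or redeploying, here is a toy sketch built on Python's standard `sys.settrace` hook. It is emphatically not how Rookout or any other product works (the text above mentions opcode manipulation, which is a different technique), and a trace hook like this carries overhead that would be unwelcome in real production. The `SnapshotPoint` class and `price_with_tax` function are invented for the example.

```python
import sys
from typing import Any, Dict, List


class SnapshotPoint:
    """Record a function's local variables each time it returns, without stopping it."""

    def __init__(self, func_name: str) -> None:
        self.func_name = func_name
        self.snapshots: List[Dict[str, Any]] = []
        self._previous = None

    def _global_trace(self, frame, event, arg):
        # Called for every new function call; only follow the function we care about.
        if event == "call" and frame.f_code.co_name == self.func_name:
            return self._local_trace
        return None

    def _local_trace(self, frame, event, arg):
        if event == "return":
            self.snapshots.append(dict(frame.f_locals))  # copy, do not hold the frame
        return self._local_trace

    def __enter__(self) -> "SnapshotPoint":
        self._previous = sys.gettrace()
        sys.settrace(self._global_trace)
        return self

    def __exit__(self, *exc_info) -> bool:
        sys.settrace(self._previous)  # the "bridge" disappears when no longer needed
        return False


def price_with_tax(amount: float, rate: float) -> float:
    tax = amount * rate
    total = amount + tax
    return total


# The data point is added and removed at runtime; price_with_tax itself is untouched.
with SnapshotPoint("price_with_tax") as point:
    price_with_tax(100, 0.5)

print(point.snapshots)
```

The function under observation runs to completion as usual, and the collected snapshot could then be forwarded to whatever logging or APM platform is already in place.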

A glimpse into the future

We’ve come a long way. Developers from the past would definitely envy the amazing accomplishments that enable modern software architectures. But when it comes to the tools we provide developers, we are not there yet. Devops has launched the world of software forward at tremendous speeds, but the complexity, connectivity, and development challenges left in its wake are preventing developers from keeping pace with their own software.

Now is the time to take another leap forward by embracing the new solutions quickly and actively working to bridge the observability gap. By more effectively connecting tools to one another, creating better workflows on top of them, and finding true agility in data collection and observability, we can bridge the gap and more. In the not-too-distant future, we can reach a point where the pace of software evolution is matched by software observability, creating a feedback loop that endlessly increases the speed of creation. A future where no one is left behind.

*Originally posted on Hacker Noon.