Announcing Smart Snapshots

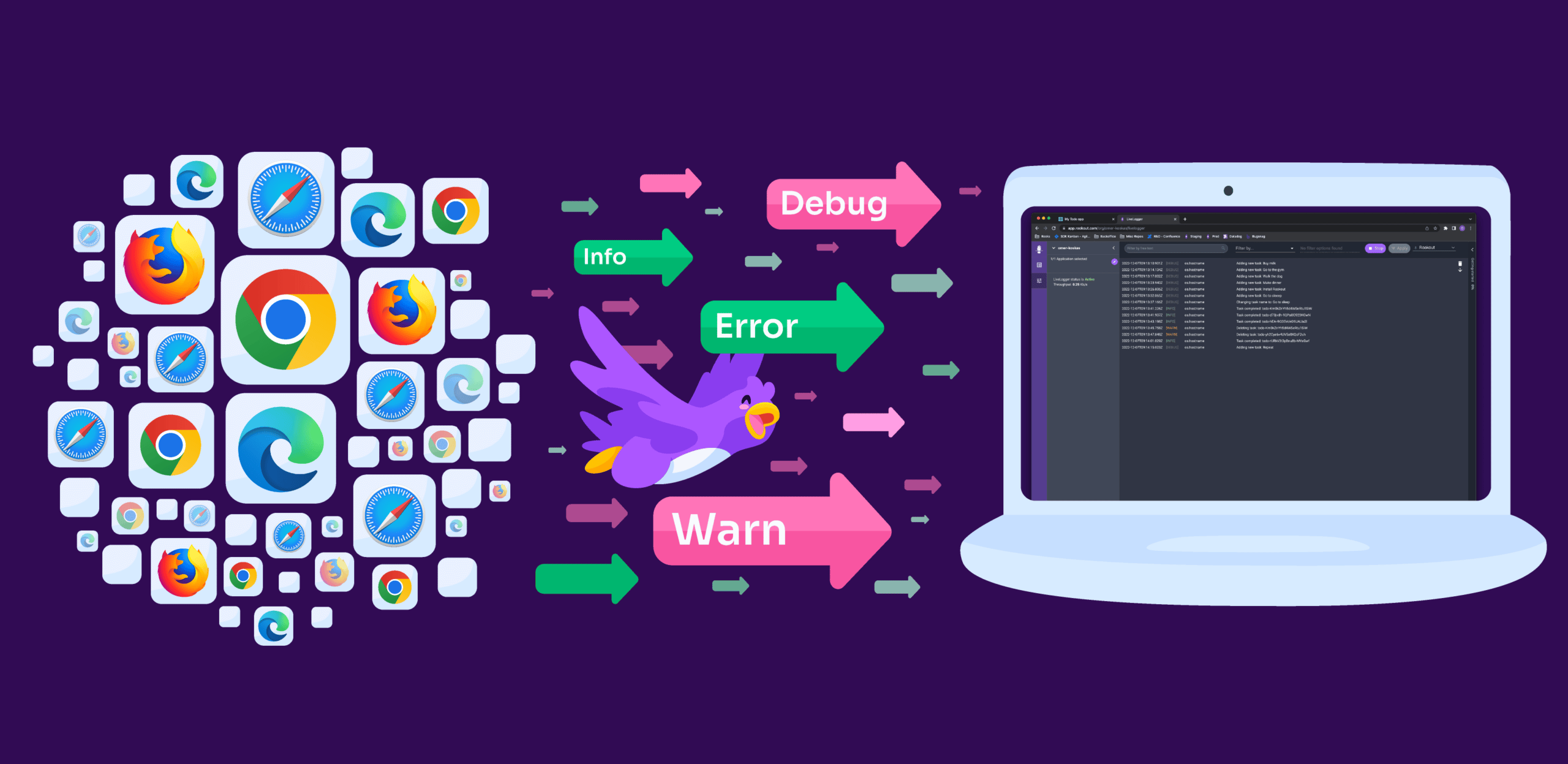

If you were to wake me up at 3 am and ask me how Rookout differs from logs (or other pillars of Observability, for that matter), my answer would have focused on the agile nature of live debugging. I would have explained that Non-Breaking Breakpoints empower you to decide in real-time what data you need.

Traditionally, you would use whatever logs (or metrics and spans) happen to be in the code and work backward from there. With Rookout, you choose the data you want with the click of a button and get it instantly. Whether investigating a production bug or exploring the code, you can iterate at your leisure, asking questions and immediately getting answers.

However, as it turns out, that’s only the half of it.

Logging is not enough

With Rookout, you can instantly add new logs, metrics, and spans to every running application (you can also tweak log verbosity, but that’s a different story). While those are powerful tools and heavily employed by our users, we discovered they are nowhere near as popular as Live Debugging.

A bit shocked, we decided to dig deeper. Discussing this with our users, we relearned how challenging it is to write high-quality logs. It goes way beyond determining where to put them.

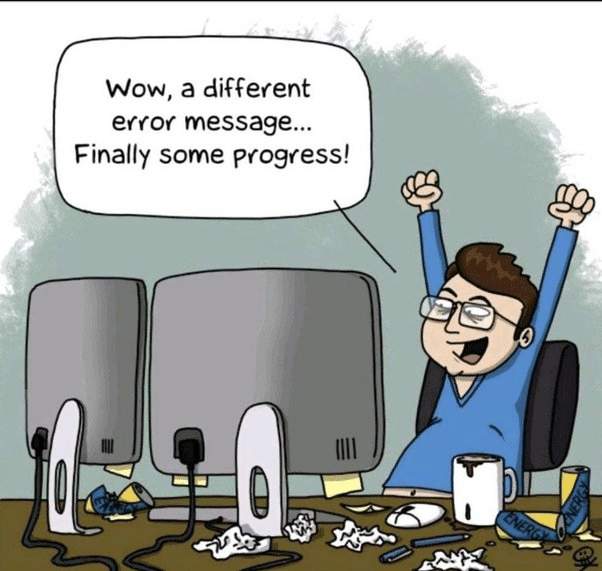

Take, for example, this logline:

`Function foobar called with value: 5`

What was the input for foobar? It was `5`, right? Or was it `"5"`? In your language, is that the same as `'5'`? Would `5.0` also be the same? And that’s before we even discuss encoding, rounding, and other edge cases.
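To make the ambiguity concrete, here is a minimal JavaScript sketch. The helper names `naiveLog` and `typedLog` are made up for illustration; they are not part of any logging library or of Rookout.

```javascript
// Format a log line the naive way: interpolate the value into a string.
function naiveLog(value) {
  return `Function foobar called with value: ${value}`;
}

// Include a JSON encoding and the runtime type, so distinct inputs stay distinct.
function typedLog(value) {
  return `Function foobar called with value: ${JSON.stringify(value)} (${typeof value})`;
}

// A number, a string, a "float" literal, and a one-element array.
const inputs = [5, "5", 5.0, [5]];

for (const input of inputs) {
  // The naive column is identical for all four inputs.
  console.log(naiveLog(input), "|", typedLog(input));
}
```

All four inputs produce the same naive log line. The typed variant separates the number, the string, and the array, but only because someone remembered to encode the type by hand. In JavaScript, `5` and `5.0` are the same number, so no log format can tell them apart there; in other languages they may well differ.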

When trying to log non-primitive variables, things get even messier. More often than not, simply stringifying objects is rather useless. Should you print specific attributes? Can you write all of them? What about non-primitive attributes? Do you want to recurse? How deep?

Don’t even get me started on collections.
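The same problem with objects and collections can be sketched in a few lines of JavaScript. The `order` object and the `encodeCollections` replacer below are hypothetical examples, chosen only to show what the standard stringification paths keep and what they lose.

```javascript
// A small nested object with a Map, a Set, and an undefined field.
const order = {
  id: 42,
  customer: { name: "Ada", tags: new Set(["vip"]) },
  items: new Map([["sku-1", 2]]),
  note: undefined,
};

// Plain interpolation calls toString(), which hides every field.
const interpolated = `order: ${order}`;

// JSON.stringify recurses, but it drops undefined values and serializes
// Map and Set instances as empty objects.
const asJson = JSON.stringify(order);

// A replacer can rescue the collections, at the cost of choosing an
// encoding for each type yourself.
function encodeCollections(key, value) {
  if (value instanceof Map) return { type: "Map", entries: [...value] };
  if (value instanceof Set) return { type: "Set", values: [...value] };
  return value;
}
const withReplacer = JSON.stringify(order, encodeCollections);

console.log(interpolated);
console.log(asJson);
console.log(withReplacer);
```

Each step recovers a bit more data, and each step is one more decision the author of the log line has to make up front.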

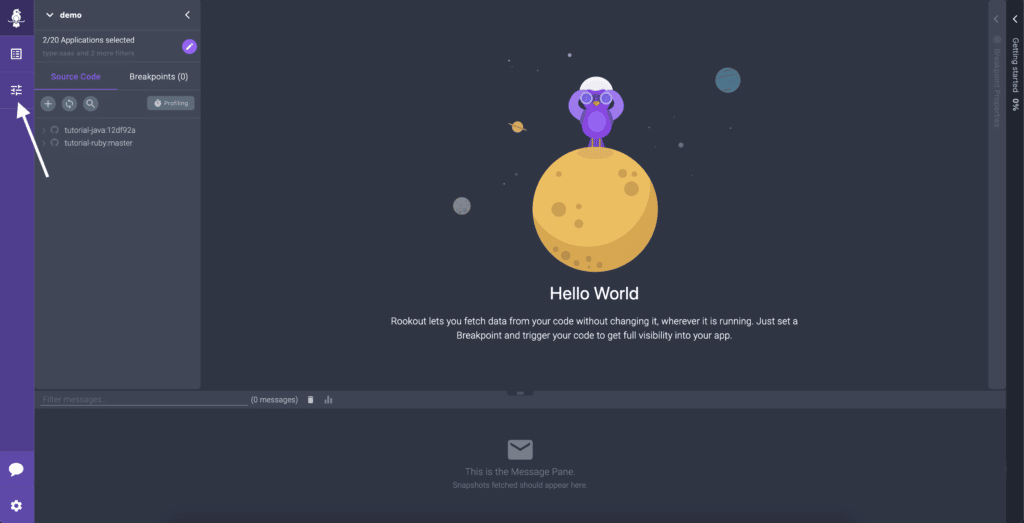

Getting all the data you need

Those challenges are why Live Debugging is so appealing. Just click on a line and get Snapshots of everything – local variable values, stack traces, global context, the works. Don’t worry about what exactly to collect or how to encode it. Rookout will accurately capture the data and even collect type information to boot.

So if Snapshots are such a powerful tool, don’t they deserve their own spotlight? They do. It’s becoming increasingly clear that Snapshots are the missing fourth pillar of Observability, and that they are much better suited to the needs of developers.

After all, a Snapshot is worth a thousand log lines.

What’s new?

It’s cool to announce that Snapshots are the fourth pillar of Observability, but what does it matter to the individual contributors out there?

First and foremost, like Logs, Snapshots can and should be integral to developing software. As you write code, you often think about obscure and bizarre edge cases without knowing how, or even if, they can happen.

Traditionally, you would add a lackluster log line and hope to deal with the problem when it happens. Fast forward, and you are often stuck with very little information to deal with the (un)expected issue.

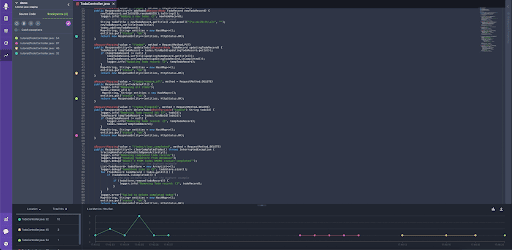

Instead, take a snapshot of the application state at that point in time. For example, in Node.js, it would look something like this:

const rookout = require("rookout");

rookout.start();

// Something went wrong here

rookout.snapshot();

Do that, and the next time you deal with an unexpected input, invalid calculation result, or corrupted internal data structure, the Snapshot will be available before you even know something went wrong.
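As a rough sketch of where such a call might sit, consider a function guarding a "should never happen" input. Everything in this example is hypothetical: `takeSnapshot` is a local stand-in that merely records the values it is handed so the sketch runs without the SDK, and `applyDiscount` is an invented function. In a real service, the guarded branch would contain the `rookout.snapshot()` call shown above.

```javascript
// Hypothetical stand-in for a snapshot call. It only records a label and a
// shallow copy of the values it is given; it is NOT the Rookout SDK.
const recorded = [];
function takeSnapshot(label, state) {
  recorded.push({ label, state: { ...state } });
}

// Apply a percentage discount. On an invalid percentage, capture the state
// at that point instead of writing a one-line log, then fall back safely.
function applyDiscount(price, percent, snapshot = takeSnapshot) {
  if (!Number.isFinite(percent) || percent < 0 || percent > 100) {
    // Something went wrong here
    snapshot("applyDiscount: invalid percent", { price, percent });
    return price;
  }
  return price * (1 - percent / 100);
}

console.log(applyDiscount(200, 25));    // valid input, discount applied
console.log(applyDiscount(200, "25%")); // invalid input, snapshot recorded
console.log(recorded);
```

The point of the pattern is that the edge-case branch captures the values that led to it at the moment it is hit, rather than a message someone has to interpret later.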

Even better, thanks to the dynamic nature of Rookout, you will be able to customize what data is being collected, set additional filters/conditions, or even disable the snapshot outright without having to change your code in any way.

Why Smart Snapshots?

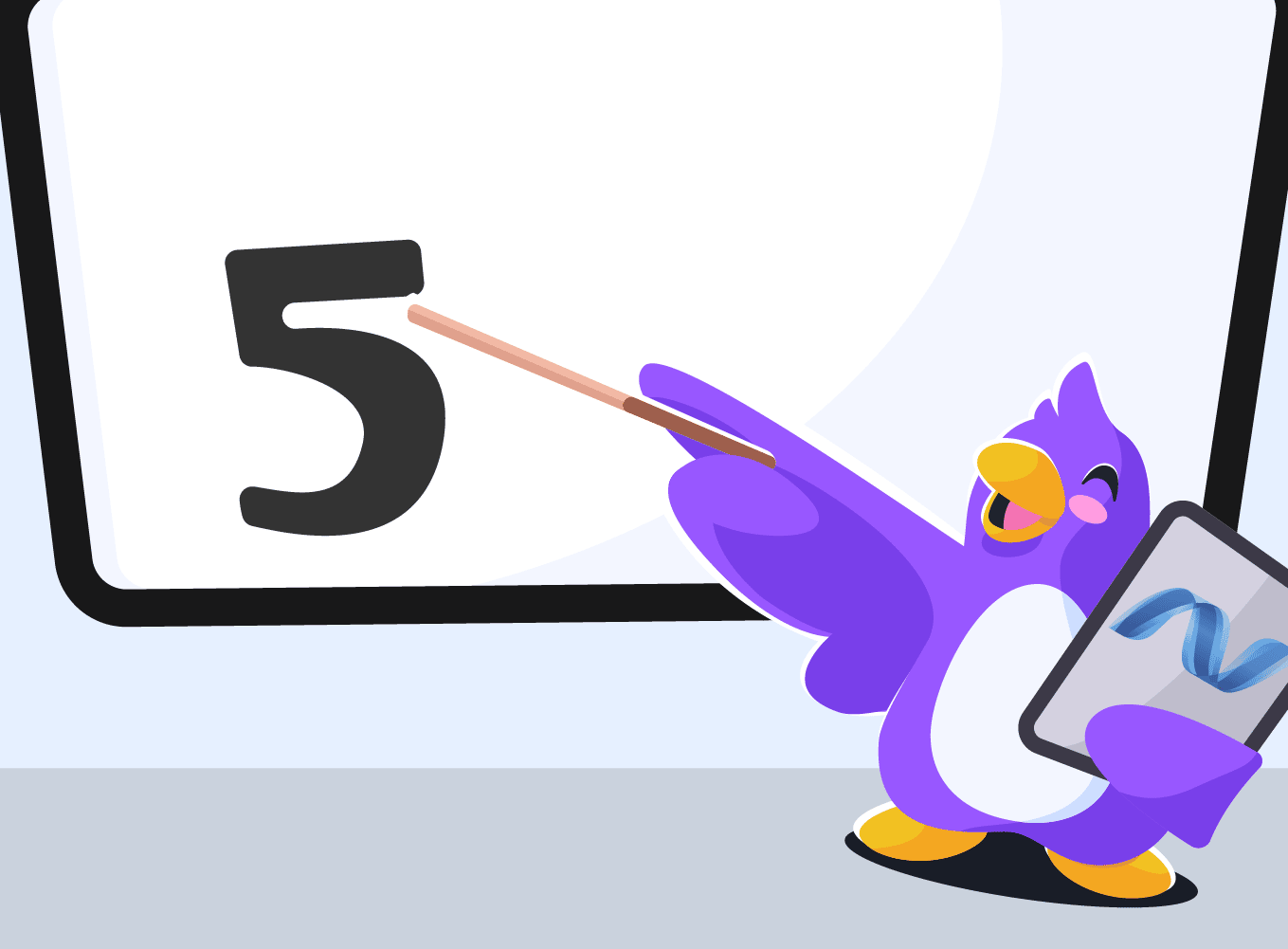

Giving you the power to embed Snapshots in your code is only the beginning. We also want your applications to identify those edge cases and take those Snapshots proactively without you even thinking about it.

The first use case that we are releasing is assert tracking for tests. Stop wasting time and effort setting up and re-running tests locally. Even better, stop worrying about tests (or test failures) that are hard to reproduce locally. Rookout will automatically enrich those annoying ‘Assert failed 1 != 0’ messages with high-quality Snapshots that explain what went wrong to make troubleshooting a breeze.

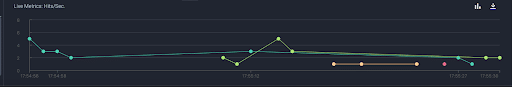

We already have support for JUnit and Jest, and this is what it looks like:

What’s next?

Our team is bursting with ideas on when taking Snapshots automatically would be the most useful.

But even more important, we would love to hear from you! In which cases will Snapshots provide you with the most value? Talk to us.