A Developer’s Adventures Through Time And Space

The craziest thing happened to me during this holiday season! It may have been all of the magic in the air (or the extra partying), but one day, just before Christmas, I suddenly realized I could travel through time. Of course, the first thing I tried was to go back and prevent several historical events from happening. But alas, it turned out I could only travel through my own personal timeline. So I decided the best way to use my newly discovered superpower was to go back and tell various past versions of myself that in 2020, the future is bright! Without further ado, here’s what unfolded next.

Back to the past: junior-dev-me

The first thing I did was go back in time to meet junior-dev-me. When I arrived, I found him working on LoadRunner, an on-premise app deployed with thousands of enterprise customers worldwide. I interrupted him in the middle of a code review session, just as he was working on a new feature. The feature will be deployed with customers two months into his future, just as the new release is launched, or maybe three to six months after that when the users choose to download and install the latest release.

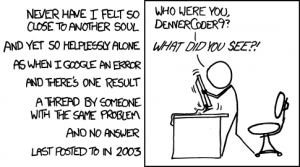

All in all, it’ll be a few months until he starts getting user feedback. It will also be a few months before he starts measuring adoption and hearing about what works and what doesn’t. Much of the code review session was pure guesswork. What’s going to happen? Where will we require log lines? What exceptions do we need to handle? What happens when we decide to measure new stuff?

Another cycle of adding log lines means releasing a hotfix, sending a patched-up DLL, or waiting for another release. The feedback loop means another 2-3 months of waiting for data. So junior-dev-me is investing even more time in adding logs, to save himself from wanting to go back in time later on. He spends entire code review sessions worrying about what data future-him will wish he could go back in time and add. “Time keeps on slippin’…”

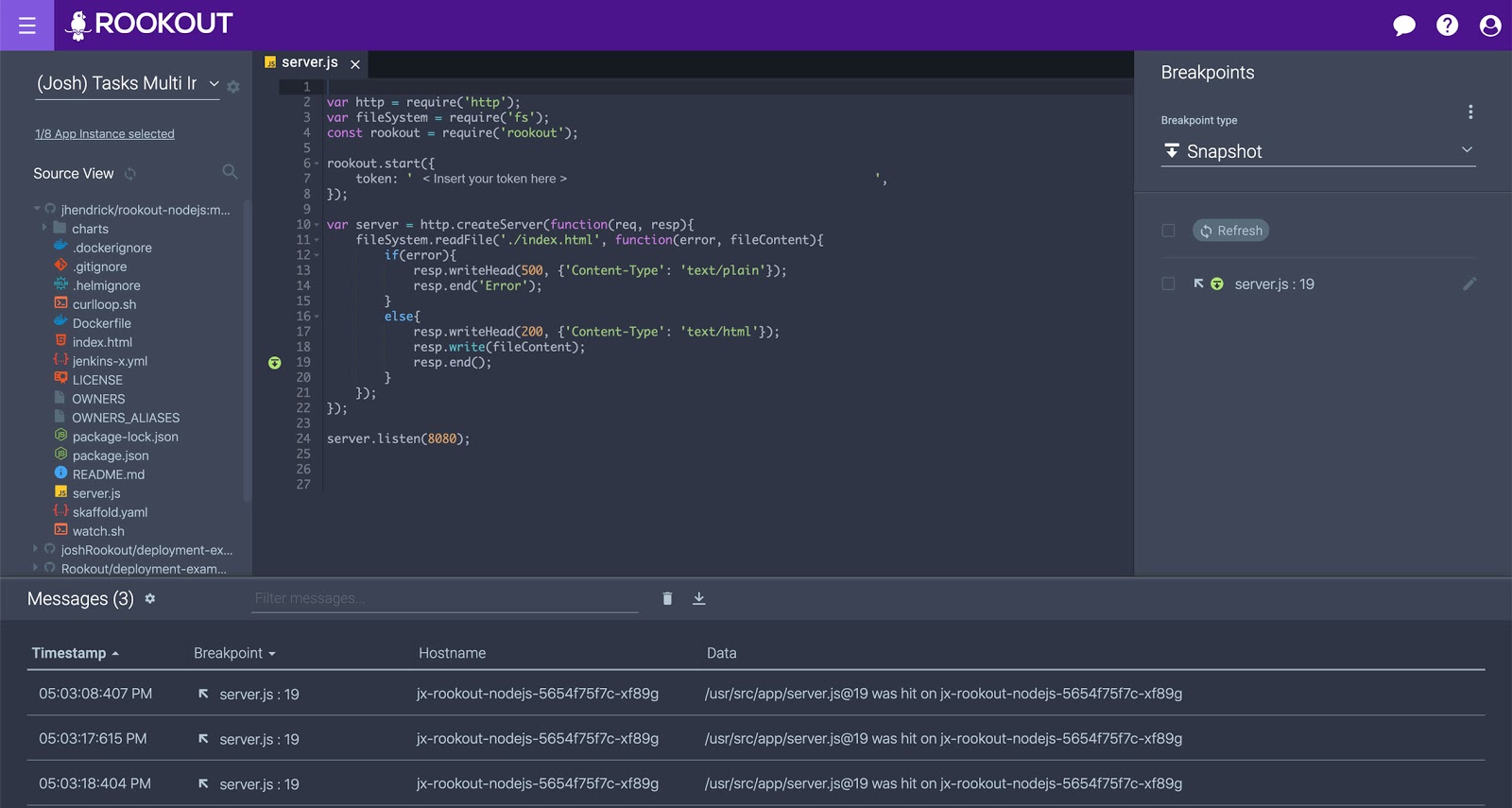

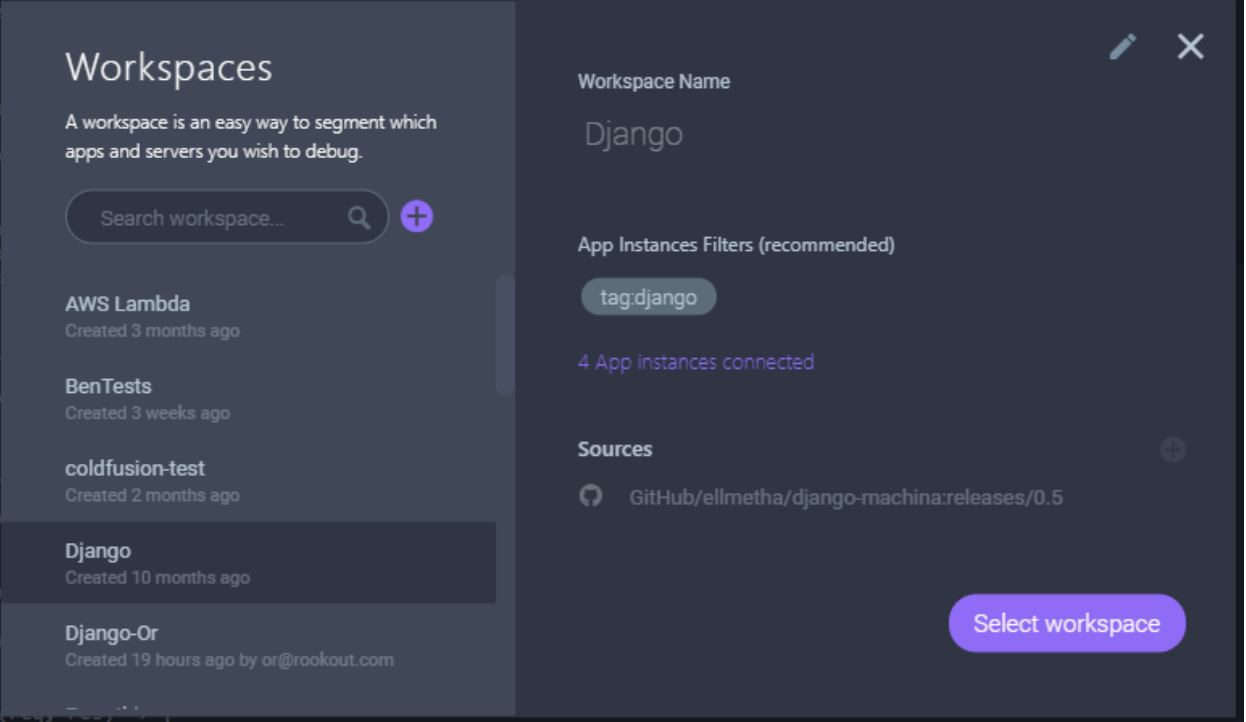

My heart went out to junior-dev-me. I introduced myself and told him that in the future, everything is going to be OK. In 2020, there’s a technology that lets you fetch data and add log lines on the fly, without changing code or waiting for a release. It works even if your app is on-premise, deployed half the world away in space, and two releases away in time. He looked impressed and then asked me who the president of the U.S. is in my time. I said never mind all that, because if I told him, he wouldn’t believe me anyway. We had coffee together (some things never change over time, like how I like my coffee), and then I went back to the future.

Pay it forward: senior-dev-me

My time-journey continued and I moved forward on the timeline to meet senior-dev-me. When I arrived, senior-dev-me was working on StormRunner, a SaaS, cloud-native app deployed in AWS EC2. I interrupted him in the middle of a design review session. He was designing a new architecture that would have to scale as an increasing number of customers required an increasing number of EC2 instances to be deployed on the fly.

Senior-dev-me was trying to figure out several things simultaneously. What scale would it have to reach? How many concurrent users can we expect to invoke this new API, and what would the failure rate be when we request such a batch deployment from AWS? If only he had some data about what customers are currently doing with the tool, and what responses we are getting from AWS.

Fetching more data means adding code, and adding code means waiting for a release. Sure, the release rate is quicker in SaaS. Instead of waiting 2-3 months, he may just need to wait 2-3 weeks. And if he needs the data urgently, he can always push a hotfix; yet even pushing a hotfix takes time, space, and effort. He has to plan it, write the code, test it, get in the queue for a code review and a pull request, then gradually roll it out. The cloud makes the app feel closer than on-premise apps, but it’s still far away. So senior-dev-me spends his time in design review sessions worrying about whether fetching more data from so far away is even worth the effort. He makes an educated guess and hopes that future-him won’t be too disappointed if it turns out to be the wrong one.

“But I’ve never caught a glimpse…

Time may change me

But I can’t trace time…

Turn and face the strange changes”

I told senior-dev-me that even though things seem confusing now, in 2020, a new platform will allow him to fetch data and add log lines on the fly, without changing code or waiting for a release. All this magic happens even if his app is a cloud-native SaaS deployed in a faraway AWS farm and a hotfix away in time. He smiled and asked me if the transition to Node.js and Angular 2.0 was worth the hassle. I said, “Don’t worry about it and don’t get too attached to that new IDE you just switched to.” We had a beer together (some things never change over time, like how I like my beer) and then I went back to the future.

Back to the not-so-distant past: product-owner-me

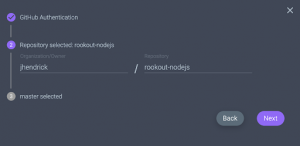

My next stop was a bit closer to my present whereabouts. This time around, I came to see the product-owner-me. When I got there, product-owner-me was working on a Kubernetes-based microservice app deployed in GCP. I interrupted him in the middle of a feature rollout session. The latest feature he designed had already been exposed to internal users on the ‘dogfood’ env, and to a couple of beta-friendly, early-adopting design partners. Many questions were now bothering him. What should the next step be? Do we expose it to everyone? Or do we do a gradual rollout, customer by customer? How will we know if they saw the new feature and if they liked what they saw? How quickly can we close the feedback loop and improve what needs to be improved?

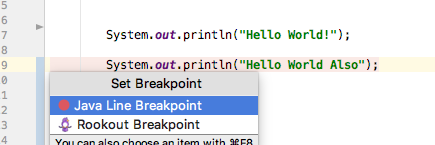

He does have some data from the measurements he had built into the feature before starting the rollout. However, the feedback he’s already received means new s*$t has come to light, and maybe he needs to bake in another couple of log lines and report another couple of metrics. Does he hold the rollout until these metrics are added? Does he prioritize adding these metrics before or after exposing this feature? Does he roll out the feature with fear and ignorance or with courage and data?

As the product owner, he always does his best to define the data to measure before starting work on a new feature. As the product owner, he is also always hungry for more data. Yet each added metric makes the feature more expensive, since it means writing more code. It involves a longer code review cycle, a longer design cycle, and a longer dev and test cycle. So he spends his feature rollout session trying to balance effort with data, space with time.

Looks like I’ll die alone

-If only you’d purchased a better cell phone

-And now I’ll never know who wins Game of Thrones

Oh, bad things are bad

I told product-owner-me that all of his hard work is going to be worth it. In the not-so-distant future, in 2020, Rookout lets you fetch data and add metrics on the fly, without changing code or waiting for a release, even if your app is a Kubernetes-based microservices app, constantly changing and evolving. He smiled and asked who wins the Game of Thrones. I told him not to get too attached to anyone on the show or to the show itself, for that matter. Also, don’t hold your breath for the final book to be finished any time soon. Then, we had a krembo together (some things never change over time, like how I am compelled to follow the rule of three) and I went back to the present.

There’s no time like the present

Just as I got back to the present, right in time to unwrap my holiday presents, I ran into future-me. He had come back in time to tell present-time-me to stop distracting myself from writing that New Year’s-themed blog post by imagining I could time travel. Well, I didn’t like his patronizing tone, so I told future-me to stop nagging. After all, I have my own process and my own pace. Stop telling me that things will be better in the future; I’ve got my own stuff to worry about. Ugh, I mean, who does that? People in the future have no manners. 😉

✨Happy Holidays and a Happy 2020 to you all! ❄️